Backup3G 3.2.6/User Guide/Backup Jobs

This page was last modified 01:49, 9 August 2007.


Backup3G provides a flexible environment for implementing a policy-based backup strategy.

You define your backup jobs by assembling them from reusable components, which are stored centrally in several tables. For example, there are tables of backup items, backup methods, and backup jobs.

How you configure your backup jobs and which backup methods you choose will be determined by the nature of your data, your available hardware, and your operations and network environment.

This section discusses:

- Planning your backup and recovery strategies

- Preparing to use backup3G

- backup3G components

- Attributes of backup jobs—what is backed up, how it is backed up, and the format it is written in


Planning Your Backup Policies and Procedures

There is no universal backup scheme that is best for all sites, and backup3G does not try to impose a standard strategy on you. However, backup3G does make it simpler to implement your backup procedures, as it lets you build backup jobs from reusable components.

Backup3G also provides a convenient means of documenting your procedures at any point, as information about all your backup jobs is stored centrally in backup3G tables.

See How to Implement Policy Based Management and the Sample Policy and Procedures Manual for some examples of policies and procedures relating to backup and media management.

Planning Backup Policies

The following factors may influence your backup strategy:

- the nature of the data—are there special factors such as legal, audit, confidentiality or data integrity requirements? How long should each backup be retained?

- the cost to the organization if some files cannot be recovered quickly or at all, for example business lost during downtime

- are backups intended more to provide full recovery after a system failure, or selected file recovery after user error? If the latter, use online indexes and multipart backup methods to speed recovery.

- what procedures should be followed to recover from a major system failure? Where will the procedures be stored and are staff aware of them?

- which files are static and which change regularly? Will filesystems be static while backups are in progress?

- your current and future network configuration—e.g. will backups be done centrally, or locally on distributed hosts?

- where should backups be stored in the short and long term? There should be a mix of onsite locations, for speed and convenience of recovery, and offsite locations, for safety in the event of a disaster affecting your main media library.

- what backup drives, auto-loading devices and media types are available? Are there limitations in terms of media capacity, I/O and network throughput, or the time available to run backups? Is there a requirement for multi-volume backups? The answers to these questions indicate how many drives or stackers you should have.

- business and operations environment: for example, is there a need for round-the-clock availability? What regular (daily, weekly, monthly, quarterly or yearly) processing cycles and operations shifts apply?

- what is the preferred backup format and method—e.g. cpio? dump?

- is an interface to third-party software (e.g. Oracle, Windows) or to another knowledge base necessary for backup and recovery?

- should an online index of all or some backups be maintained to speed recovery?

Designing Backup Procedures to Aid Data Recovery

This topic suggests some good practices to help you recover data quickly and reliably.

Develop and test disaster recovery procedures

Prepare a recovery plan for potential disasters. Don’t focus on events such as fire or flood—focus on effects such as loss of a particular filesystem, disk, host, tape drive, network connection, or data center. Allow for the possibility that key staff or computing facilities may be unavailable.

Document procedures and contact numbers so that staff can quickly find and use them when required.

Test your disaster recovery procedures using different scenarios, including combinations of problems. Perform a ‘post-mortem’ on each test. Correct any flaws found in the procedures. Document and distribute the test results to key managers and end-user representatives.

Back up the backup3G database last

Design your backups so that the COSmanager directories, including backup3G, are backed up in the last step of the last job run each night. This will ensure that the media contents table on the tape is up-to-date if you have to recover backup3G itself.

The media contents table is stored in the db directory under backup3G’s home directory.

Keep hard copy printouts of media contents

By default, backup jobs have an At-unload cmd that runs the medprint command. medprint prints a hard copy of the media catalog, or the contents of the media set. File each printout in a safe onsite location. If you lose the media database, you can search these paper copies to find the media set and tape file containing the most recent copy of backup3G. If the media set is sent for storage offsite, send a paper copy of the media catalog with it.

Check backup logs and verify backups

Make someone responsible for checking the backup logs every day. Operations staff should be alerted to any backup step that returns a non-zero exit status. Rerun any failed backup steps as soon as possible.

From time to time you should verify that backup data is recoverable. Select a few backups (especially older ones) and test recovering some files. A good way to verify indexed backups is to delete the original index and have backup3G recreate it by reading the media set.

Follow recommended media handling practices

Define procedures for handling and storing backup media and check to see that they are being followed. These procedures should include:

Media lifecycle: Tapes are cheap—lost data is expensive.

- Use high-quality data-grade media.

- Don’t keep using tapes until they fail. Define a maximum number of uses and retire tapes when they reach this limit.

- Monitor backup logs to check for I/O errors. An increase in transient errors may indicate that the drive requires cleaning or maintenance, or that the tape is near the end of its useful life.

Storage and handling: when not in use, tapes should be kept in their plastic case and stored vertically—that is, resting on the long narrow edge.

The physical environment: tapes and drives will last longer in a clean environment where the temperature and humidity are kept constant within the ranges recommended by the manufacturer.

- In areas where media are stored, handled, or used, bar potential contaminants such as food, drink, and smoke.

- Don’t put tape units near printers or photocopiers, as paper dust and toner will damage drive heads and tapes.

Acclimation: If tapes come from another location such as an offsite storage facility, try to allow twenty-four hours for them to adjust to the new temperature conditions. This may not be possible if the tapes are needed for recovery.

Identification: tapes should be physically labeled with the media number. When a media set is sent to or returned from offsite storage, a paper copy of the media catalog listing the tapes’ contents should be sent with it.

Clean your tape drives regularly

Backup drives should be cleaned regularly, otherwise dust and other deposits will build up on the heads, leading to increased error rates and reduced drive performance.

Tape drives should be cleaned with a cleaning tape at least once a month, and much more often where they are heavily used or where the physical environment (temperature, humidity, dust) is not optimal.

The drive manufacturer will have more detailed information on how to extend the reliability and working life of your drives.

Preparing to Use backup3G

Backup3G is installed at your site – now you want to run your normal backups. What happens next?

Backup3G comes with some standard components for defining backup jobs. You can add new backups from predefined components through the Maintain backup details option.

There is an easier way to create a generic set of backup jobs. The initial configuration process leads you through a step-by-step procedure to generate a simple backup scheme for each filesystem. For some smaller sites this may be all that is required. Larger organizations can extend the basic scheme by customizing and adding to the job schedule. See Generate Simple Backup Scheme.

Together, the predefined components and the simple backup scheme form the skeleton of a complete backup system.

Your next task is to flesh out this skeleton by adding details of the components and procedures that are specific to your site. We strongly recommend that you take some time to review your backup procedures. See Planning Your Backup Policies and Procedures.

These details are stored centrally in backup3G’s database, rather than being duplicated in shell scripts and applications throughout the network. This means that new details and changes (for example, a new tape drive) need to be entered only once.

You may not need to do some of the following steps, as many of the components and site-specific details will have been defined when backup3G was installed.

- If the supplied media details are not sufficient for your needs, enter additional details, including media types, label types and offsite storage locations. See How To Read and Maintain Media Details for more information.

Note that it is important to add the capacity and part size for each media type that will be used to write multi-part backups.

- Define details of all removable media drives on the network that will be used to read from or write to a backup volume.

- Define backup items for all the directories and filesystems on the network that you plan to back up.

- Define any retention periods you wish to use that aren’t already defined in backup3G.

- Define backup jobs corresponding to all your current and planned backups.

Backup Components

In backup3G, your backup strategy comprises backup jobs which are assembled from a set of reusable components.

Backup Jobs

The backup job defines the ‘policy’, that is, it specifies the schedule of the job, the drive to be used, the retention period and, if applicable, the offsite location. A backup job comprises one or more backup steps; each step usually backs up one item. The job can also run pre- or post-processing commands, for example to shut down a database or e-mail a completion message.

Further information on backup jobs and an insight into how they are configured is available in How to Define a Backup Job.

Backup Items

A backup item comprises a data object (a filesystem, directory, disk partition, or database) and the method that will be used to back it up. You can also pass optional flags and arguments that are supported by the backup method. See How to Define a Backup Item for more information, and To Define the Steps in a Backup Job for an illustration of how backup items are used.

Backup Methods

Backup methods contain the commands used to write the backup, and describe whether attributes such as online indexes, multi-part backups and remote backups are supported. backup3G includes several predefined methods, based on standard UNIX archiving commands such as cpio, dump and tar. See Appendix C— Backup Methods and Drivers. You can add new backup methods based on your existing shell scripts and third-party backup software—see Appendix D—Defining Backup Methods.
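To make the relationships between these components concrete, here is a minimal Python sketch of how jobs, steps, items and methods fit together. The class and field names, hosts and drive names are hypothetical and do not reflect backup3G's actual table layout; the point is that one method (here ‘full cpio’) can be reused by many items, and each step in a job refers to an item.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BackupMethod:
    """Commands that write the backup, plus the features the method supports."""
    name: str
    command: str                    # placeholder for the method's backup command
    supports_index: bool = False
    supports_multipart: bool = False
    supports_remote: bool = False


@dataclass
class BackupItem:
    """A data object (Host and Object fields) paired with the method that backs it up."""
    name: str
    host: str
    obj: str                        # filesystem, directory, raw partition, ...
    method: BackupMethod
    options: str = ""               # optional flags and arguments for the method


@dataclass
class BackupStep:
    item: BackupItem                # each step usually backs up one item


@dataclass
class BackupJob:
    """The 'policy': schedule, drive, retention and, optionally, offsite location."""
    name: str
    schedule: str
    drive: str
    retention: str
    offsite_location: Optional[str] = None
    pre_command: Optional[str] = None    # e.g. shut down a database
    post_command: Optional[str] = None   # e.g. e-mail a completion message
    steps: List[BackupStep] = field(default_factory=list)


# Hypothetical site: one method shared by two items in one nightly job.
full_cpio = BackupMethod("full cpio", command="<backup command>", supports_index=True)
usr = BackupItem("usr", host="server1", obj="/usr", method=full_cpio)
home = BackupItem("home", host="server1", obj="/home", method=full_cpio)
nightly = BackupJob("nightly", schedule="daily 22:00", drive="tape0",
                    retention="4 weeks", steps=[BackupStep(usr), BackupStep(home)])
```

Because the method is stored once and referenced by both items, changing it (for example, enabling an option) affects every item that uses it.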

Removing and Renaming Backup Job Components

Generally, you can freely define, reuse, rename and remove backup components. However, there are two situations in which backup3G steps in to maintain the integrity of your backup procedures:

- A backup item can’t be removed or renamed while there is still a backup step that refers to it.

- If a backup job is renamed or removed, all the steps that belong to it are also renamed or removed.
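The two integrity rules can be modelled with a small in-memory catalog. This is a sketch under stated assumptions: the Catalog class and its methods are hypothetical, a job is reduced to a list of item names (one per step), and violations raise exceptions rather than producing whatever message backup3G displays.

```python
class Catalog:
    """Hypothetical in-memory model of the backup items and backup jobs tables."""

    def __init__(self):
        self.items = set()      # backup item names
        self.jobs = {}          # job name -> list of item names, one per step

    def add_item(self, name):
        self.items.add(name)

    def add_job(self, name, step_items):
        missing = [i for i in step_items if i not in self.items]
        if missing:
            raise KeyError(f"unknown backup items: {missing}")
        self.jobs[name] = list(step_items)

    def _referenced(self, item):
        return any(item in steps for steps in self.jobs.values())

    def remove_item(self, name):
        # Rule 1: an item can't be removed while a step still refers to it.
        if self._referenced(name):
            raise ValueError(f"backup item {name!r} is still used by a backup step")
        self.items.discard(name)

    def rename_item(self, old, new):
        # Rule 1 also applies to renaming.
        if self._referenced(old):
            raise ValueError(f"backup item {old!r} is still used by a backup step")
        self.items.remove(old)
        self.items.add(new)

    def rename_job(self, old, new):
        # Rule 2: the job's steps move with it.
        self.jobs[new] = self.jobs.pop(old)

    def remove_job(self, name):
        # Rule 2: removing a job removes all of its steps too.
        del self.jobs[name]
```

Once the job that uses an item has been removed, the item is no longer referenced and can itself be removed or renamed.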

What is Backed Up?

What is the Data Object?

The data object is the thing to be backed up: a filesystem or directory tree, a disk partition, or a database. The data object is specified in the Host and Object fields of the backup item.

Filesystems and directories

Backup3G has several backup methods, based on cpio, dump, and tar, for backing up standard UNIX filesystems. You can back up:

- all files in the filesystem (full backup)

- only files that have changed since the last full backup (incremental backup)

- a directory and all its files and subdirectories

You can also supply a list of files and directories to be included in or excluded from the backup—see Selected Backup: Including and Excluding Files.

A ‘full-plus-incrementals’ backup strategy may be necessary if there isn’t enough time available to perform full backups as often as required. With modern high-speed, high-capacity tape drives this strategy is less common.
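The difference between a full and an incremental backup comes down to which files are selected. The sketch below assumes that ‘changed’ means a modification time later than the time of the last full backup; the function name files_to_back_up is hypothetical, and backup3G's cpio and dump methods may apply a different test.

```python
import os


def files_to_back_up(base_dir, last_full_time=None):
    """Return the files under base_dir (including subdirectories) to back up.

    last_full_time is None  -> full backup: every file is selected.
    last_full_time is a timestamp -> incremental backup: only files whose
    modification time is later than the last full backup are selected.
    """
    selected = []
    for root, _dirs, files in os.walk(base_dir):
        for name in files:
            path = os.path.join(root, name)
            if last_full_time is None or os.path.getmtime(path) > last_full_time:
                selected.append(path)
    return sorted(selected)
```

Because the comparison is always against the last full backup, each incremental in this sketch contains everything changed since that full backup, so a recovery needs the full backup plus the most recent incremental.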

Disk partitions or database files

The ‘image copy’ backup methods can be used to back up a single very large file or raw disk partition. The main difference from a normal backup is that in the backup item, you use either the single file name or the UNIX raw disk device name instead of a directory name in the Object field.

For example, some DBMS products optionally use raw partitions for database extents, rather than normal UNIX filesystems. To do a regular backup of a large database partition, create a new backup item using the method ‘image copy - MP’.

In the Object field, put the raw device name of the disk partition that contains the database. Example: /dev/rdsk/c0d0s4.

Oracle databases

Backup3G has an add-on module called DA-Oracle that performs backup and restore of Oracle databases. DA-Oracle supports:

- offline backup of an Oracle instance

- online backup of an Oracle instance or selected tablespaces

- export of an Oracle instance or selected tablespaces

- archive and backup of redo log files

- full or partial restore of backed-up Oracle objects.

See the DA-Oracle User Guide for full details.

Is the Data on a UNIX host or a Windows host?

Backup3G is available for nearly all commercial UNIX and Linux platforms, including operating systems from Sun, Hewlett-Packard, IBM, SCO, Silicon Graphics, Unisys, Red Hat, Debian and others.

Backup3G looks and works the same on all these UNIX systems, so you can configure and manage backups without worrying about platform-specific details.

Backup for Windows systems is supported through Enterprise Windows Clients. See Install/Deinstall Enterprise Backup Support.

Note: You must back up NFS-cross-mounted filesystems on the host where they reside.

Back Up the Whole Filesystem or Selected Files

By default, the ‘full cpio’ backup methods back up all files under the base directory. In a selected backup, you can pass a list of files and file name patterns that are to be included in or excluded from the backup.

Note: Selected backups don’t update the .FSbackup file, so you can’t use selected backups with a ‘full plus incremental’ backup strategy.

Selected Backup: Including and Excluding Files

You pass the file names to the backup method through the Options field in the backup item. The Options field can contain either the actual list of file names and patterns, or the name of a file that the backup command reads to get the file names to be included or excluded.

The syntax is as follows:

- -s <files>: back up only files matching these patterns under the base directory

- -S <file>: same as -s, but read <file> to get the list of file names and patterns

- -x <files>: back up files under the base directory except those matching these patterns

- -X <file>: same as -x, but read <file> to get the list of file names and patterns to be excluded from the backup

The list of files can include any number of file names and shell file patterns, each separated by a space.

Use -s or -S to split a large directory. Use -x or -X to exclude files that you never want to recover. You can also use the two in combination: use a selected backup to back up certain files in a directory, then do a full backup that excludes the files in the selected backup.

Use -S or -X when the list of files and patterns is too long to fit in the Options field, or if you have a standard set of files that you want to use in several backup items.
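The include and exclude options can be pictured as filters over the paths under the base directory. This Python sketch is illustrative only: the function names are hypothetical, paths are taken relative to the base directory, and it uses fnmatch, whose * also matches /, so it is looser than the shell patterns backup3G expands at run time. It shows the split-directory technique described above, where a selected backup and an excluding backup together cover every file.

```python
import fnmatch


def parse_patterns(text):
    """Split a pattern list on white space (spaces in the Options field and,
    by assumption, spaces or newlines in a -S/-X file)."""
    return text.split()


def matches_any(path, patterns):
    return any(fnmatch.fnmatch(path, pattern) for pattern in patterns)


def selected(paths, patterns):
    """Like -s: keep only the paths that match one of the patterns."""
    return [p for p in paths if matches_any(p, patterns)]


def excluded(paths, patterns):
    """Like -x: keep every path except those that match one of the patterns."""
    return [p for p in paths if not matches_any(p, patterns)]


# Hypothetical directory split into two backup items.
paths = ["db/data1", "db/data2", "src/main.c", "tmp/core"]
db_patterns = parse_patterns("db/*")
first_item = selected(paths, db_patterns)      # the db/ files
second_item = excluded(paths, db_patterns)     # everything else
```

Using the same pattern list for both items guarantees that no file is missed or backed up twice, which is the reason to keep a shared list in a -S/-X file.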

Note: Backup3G does not verify the list of files when you enter the backup item. The list is passed to the backup command at run time, when any file patterns are expanded.

Deleting Backup

There is a potential problem with a backup strategy that relies on a full backup followed by incremental backups. Files that were intentionally deleted or renamed after the full backup was taken will be recreated when you restore the full backup.

The solution is to use a backup method that supports deleting backup.

Deleting backup: mail folders example

On Monday night, a full backup is taken of your mail folders, comprising the Mail directory and its subdirectories. On Tuesday you delete some messages from your inbox and file others in different folders. On Tuesday night an incremental backup is done, containing messages that arrived in your inbox or were changed or refiled since the full backup. On Wednesday morning there is a disk failure that corrupts your mail directories. You recover using Monday’s full backup and Tuesday’s incremental backup.

All the files that were present on Monday night are restored to their original directories. Files changed or added since the full backup are restored from the incremental backup. Together, these have the desired effect of recovering every file in the filesystem at the time of Tuesday night’s incremental backup. The problem is that some extra files will also be recovered that weren’t present on Tuesday night: the ones you deleted or refiled on Tuesday.

How ‘deleting backup’ works

A deleting backup lets a restore remove files that were present at the time of a full backup but were removed or renamed before a later incremental backup. All of backup3G’s cpio backup methods support deleting backup and restore via the -d flag. It works as follows:

The full backup writes a list of all the files it backs up to a file called .FSbackup. When the incremental backup runs, it prepares a similar list of all the files currently in the directory and compares the two. Any file listed in .FSbackup but missing from the current list is appended to .FSbackup.del; this is the deletion list. Any file in the current list that is not also present in .FSbackup is marked to be backed up, including files that were renamed but not modified.

The job then backs up all the modified files, as for any incremental backup, then backs up .FSbackup.del. Finally, .FSbackup.del is deleted from the disk, so the only copy is on the backup volume.

Note: In both the full and the incremental backup, an extra pass is required to build (and, for the incremental, compare) the file lists before the backup starts, so a deleting backup takes slightly longer.

After a full recovery, if .FSbackup.del was recovered, all the files listed in it are removed, then .FSbackup.del itself is removed. This removes from the recovered directory any files that were present at the time of the full backup but had been removed or renamed before the incremental backup. Renamed files appear as new files to the incremental backup, so they are backed up, and recovered, under their new names.
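The bookkeeping behind a deleting backup is set arithmetic on file lists. The Python sketch below replays the mail-folder example; the function names are hypothetical, and plain Python sets stand in for .FSbackup, the incremental's directory listing and .FSbackup.del.

```python
def plan_incremental(full_list, current_files, modified):
    """Return (files to back up, deletion list) for a deleting incremental backup.

    full_list     -- names recorded in .FSbackup by the full backup
    current_files -- names found in the directory when the incremental runs
    modified      -- names changed since the full backup
    """
    full, current = set(full_list), set(current_files)
    deletion_list = sorted(full - current)      # removed or renamed since the full backup
    new_names = current - full                  # new files, including renamed ones
    to_back_up = sorted(new_names | (set(modified) & current))
    return to_back_up, deletion_list


def restore(full_backup, incremental, deletion_list):
    """Restore full + incremental, then remove every file on the deletion list."""
    return (set(full_backup) | set(incremental)) - set(deletion_list)


# Monday night's full backup of the mail folders.
full_list = ["inbox/1", "inbox/2", "inbox/3"]
# Tuesday: inbox/1 deleted, inbox/2 refiled as work/2, inbox/4 arrives.
current = ["inbox/3", "inbox/4", "work/2"]
to_back_up, deletion_list = plan_incremental(full_list, current, modified=["inbox/4"])
recovered = restore(full_list, to_back_up, deletion_list)
```

Without the deletion list, the recovery would also bring back inbox/1 and inbox/2; with it, the recovered set matches the directory as it was on Tuesday night.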

By default, deleting backup is enabled in each cpio backup method. You can disable deleting backup by removing the -d flag in the Backup command field of the backup method.

How is it Backed Up?

Single Volume or Multi-volume

If the total amount of data to be written in all steps exceeds the capacity of a single volume, the backup can be split over as many volumes as are required: this is called a multi-volume backup. A multi-volume backup job can be made up of several single-part backup steps, one or more multi-part steps, or a combination of the two.

The set of backup volumes that contain a multi-volume backup is called a media set. Each volume is identified by a Set ID and Sequence number. The Set ID is the media number of the first volume, and the sequence number is the position of the volume in the set.

If a multi-volume backup job encounters a write error or unexpected end-of-tape, the incomplete part is rewritten from the start of a new volume.
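The Set ID and sequence number scheme can be sketched in a few lines of Python. The function names and media numbers are hypothetical, sequence numbers are assumed to start at 1, and volumes_needed gives only the minimum count: it ignores volumes consumed when an incomplete part is rewritten after a write error.

```python
def volumes_needed(total_bytes, capacity_bytes):
    """Minimum number of volumes for a multi-volume backup (ceiling division)."""
    return -(-total_bytes // capacity_bytes)


def label_media_set(media_numbers):
    """Return (Set ID, sequence number, media number) for each volume in a set."""
    set_id = media_numbers[0]         # Set ID is the media number of the first volume
    return [(set_id, seq, media) for seq, media in enumerate(media_numbers, start=1)]


# Hypothetical three-volume set.
labels = label_media_set(["T0101", "T0102", "T0103"])
```

Every volume carries the same Set ID, so the whole set can be found from any one of its volumes, while the sequence number gives the order in which they must be read.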

Single-part Backup Step or Multi-part Backup Step

Backup3G supports multi-part backup steps. Normally, each backup step writes to a single file on the backup volume. A multi-part backup is a single step that writes to the output medium in several parts, for example:

- to split very large files which exceed the capacity of a single tape

- to back up a raw disk partition (e.g. a database)

- to speed recovery of selected files in a large filesystem.

Part size

Tape drives can seek (skip over data) much faster than they can read it. If an indexed backup is split into several parts, the recovery program can seek directly to the part containing the next requested file. For example, if you want to recover a single file and the part size of the backup is 200 MB, backup3G skips over any preceding parts and reads at most the 200 MB part containing the selected file. Recovering a selected file this way might take 4 to 5 minutes, depending on the drive.

The ‘part size’ and total capacity of each output medium are defined in the media type table. See Capacity and Part Size to find out how to determine the most efficient part size and capacity.

Each part corresponds to a physical file on the output volume. The sequence number is appended to the Comments field for each volume in the media set – for example: Backup of /usr directory - Part 3 of 5.
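The arithmetic behind part-based recovery is simple integer division. This Python sketch assumes, hypothetically, that the index records each file's byte offset within the backup and that the file does not straddle a part boundary; the function names are illustrative and not part of backup3G.

```python
MB = 1024 * 1024


def part_containing(offset_bytes, part_size_bytes):
    """1-based number of the part in which a file starting at offset_bytes lies."""
    return offset_bytes // part_size_bytes + 1


def part_count(total_bytes, part_size_bytes):
    """Number of parts needed for a backup of total_bytes (ceiling division)."""
    return -(-total_bytes // part_size_bytes)


def part_comment(comment, part, total):
    """Comment text for one part, following the pattern in the text above."""
    return f"{comment} - Part {part} of {total}"


# A hypothetical 1000 MB backup of /usr written in 200 MB parts.
total_parts = part_count(1000 * MB, 200 * MB)
wanted = part_containing(450 * MB, 200 * MB)   # file starts 450 MB into the backup
skipped_mb = (wanted - 1) * 200                # preceding parts are skipped, not read
print(part_comment("Backup of /usr directory", wanted, total_parts))
# prints: Backup of /usr directory - Part 3 of 5
```

Here 400 MB is skipped at seek speed and at most one 200 MB part is read, which is why a smaller part size speeds selective recovery at the cost of more files on the volume.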

Scheduled, Automatic, or At-request Backup

Scheduled and automatic backups run to a regular schedule. Automatic backups start at the scheduled time without having to be initiated by an operator. With scheduled backups, the operator is prompted at the scheduled time to initiate the job. Scheduled backups usually require some action, such as manually loading a tape.

Backup3G can schedule backups to a daily, weekly, or monthly cycle. If more advanced schedules are required, you can use duty3G to implement complex scheduling and inter-task dependencies.

At-request backups are run only when initiated by an operator, and not to any regular schedule.

All backup jobs, whether submitted from backup3G, duty3G, or cron, are run from the FSbackup command.

The FSbackup Command

FSbackup schedules or runs a backup job that has been pre-configured in backup3G. Backups can be run in one of four ways:

- scheduled: runs the job later (generally overnight or on the weekend)

- interactive: starts the job at once and progressively displays job output on the screen

- background: starts the job at once and doesn’t tie up the user’s screen (preferable for long-running jobs)

- immediate: runs the job at once, with no interaction from the user. This mode should be used for backups that are run by cron or from an automatic duty (see the duty3G User Guide).

In all modes, job output is written to the Backup log file.

If duty3G is installed, the Duty menu option under Maintain backup jobs > Jobs generates a duty to run an FSbackup command using information defined in the backup job. You can override many of the default values, including the output drive, retention period and run time. If duty3G is not installed, the Duty option is not available.

See the FSbackup(1) manual page for full details of the syntax and available options.

One-off Archive or Reusable Backup Job

Backup jobs are stored in backup3G and can be run repeatedly and modified or cloned.

You can also perform a one-off archive of selected directories. Archive jobs can be quickly defined and run but are not saved and so can’t be reused.

You can choose to have the directories removed after the archive has completed. See How To Archive Files and Directories.

Appending Backups

You can write a backup to the end of a media set that was created by another backup job. The advantages of appending backups are:

- you can use the full capacity of large tapes

- you can keep related backups together.

Appending backups are specified in the Append to field of the backup job details. If Append to is blank, the job writes to a new media set every time it runs.

How appending backups work

Example: JobB appends to JobA. When JobB runs, it looks in the media database for the current media set for JobA. If the current set is found, backup3G loads the last volume in the set, scans to the end-of-data marker, and starts writing data from JobB. If for any reason the current set cannot be loaded (because it is at a different location, for example), the job starts a new media set.
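The decision an appending job makes can be sketched in Python. This is a hypothetical model, not backup3G's code: a dictionary stands in for the media database's record of each job's current media set, and ‘can be loaded’ is simplified to ‘is in the load location’.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class MediaSet:
    set_id: str                          # media number of the first volume
    location: str
    volumes: List[str] = field(default_factory=list)


def choose_media_set(job: str, append_to: str, current_sets: Dict[str, MediaSet],
                     load_location: str, new_media: str) -> Tuple[MediaSet, bool]:
    """Return (media set to write to, True if appending to an existing set).

    append_to     -- the job's Append to field ("" if blank)
    current_sets  -- hypothetical stand-in for the media database: job -> current set
    load_location -- where volumes can be loaded (e.g. a stacker)
    new_media     -- media number to use if a new set has to be started
    """
    current = current_sets.get(append_to) if append_to else None
    if current is not None and current.location == load_location:
        # Load the last volume, scan to end-of-data and write after it.
        return current, True
    # Blank Append to, no current set, or set not loadable: start a new set.
    new_set = MediaSet(set_id=new_media, location=load_location, volumes=[new_media])
    current_sets[job] = new_set
    return new_set, False
```

For a job that appends to itself, like User-backups in the examples that follow, the first run starts a set, later runs append to it, and moving the set out of the load location makes the next run start a fresh one.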

Notes

- The expiry date of the media set is the latest date of any job on the media set.

- If you keep appending new backups to a media set, it will never expire. To break the chain, move the media set out of the load location: for example, keep appending a week’s backups to tapes in a stacker, then move the media set from the stacker to another location.

- You can’t scratch a single backup or single volume—scratching a media set scratches all backups and volumes in the set.

- We recommend you use the COSstdtape label type for tapes that are used for appending backups (see To add a media type). COSstdtape writes an end-of-data marker to make certain that later backups do not overwrite data from earlier ones.
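The expiry rule in the first note can be expressed as a maximum over the backups on the set. This Python sketch assumes, hypothetically, that each backup's retention period is a number of days counted from its run date; backup3G's retention periods are defined in its own table and may be expressed differently.

```python
from datetime import date, timedelta


def media_set_expiry(backups):
    """Expiry date of a media set: the latest expiry of any backup written to it.

    backups -- list of (run_date, retention_days) pairs, one per job run
    """
    return max(run_date + timedelta(days=days) for run_date, days in backups)


# Hypothetical weekly cycle: full backup on Wednesday 1 August 2007,
# then an incremental appended on Tuesday 7 August, both kept 28 days.
cycle = [(date(2007, 8, 1), 28), (date(2007, 8, 7), 28)]
print(media_set_expiry(cycle))   # 2007-09-04
```

Each appended run can only push the expiry date later, which is why a set that is appended to indefinitely never expires.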

Following are some examples of appending backup strategies.

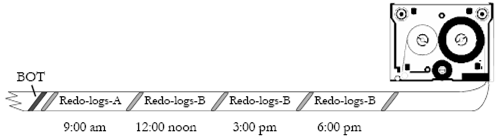

Example: frequent backup of database archive logs

Many DBMS users require archive logs to be archived to tape and then deleted from disk, perhaps several times a day. The logs are generally small relative to the capacity of a tape. For speed of restoration and efficient use of tapes the backups should be kept together.

Steps

Define an automatic backup called Redo-logs-A to back up the current set of redo logs. Schedule it to run at 9:00 am each day.

Create a clone of Redo-logs-A and call it Redo-logs-B. Schedule it to run at 12:00, 3:00 pm, and 6:00 pm each day.

In the job details for Redo-logs-A set Append to to null. Each time Redo-logs-A runs it will start a new media set.

In the job details for Redo-logs-B set Append to to Redo-logs-A. Each time Redo-logs-B runs it will write to the media set started by Redo-logs-A that morning, so each day’s redo logs will be stored on one media set.

| Job name | Run at | Append to job |
|---|---|---|
| Redo-logs-A | 9:00 | (blank) |
| Redo-logs-B | 12:00, 15:00, 18:00 | Redo-logs-A |

- Figure 5 — Back up database redo logs

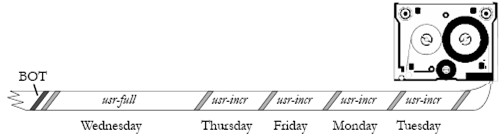

Example: group related backups on one media set

Perform a full backup of /usr each Wednesday, and an incremental backup of /usr on the other week nights. Write each weekly cycle to one media set.

Steps

Define a job usr-full to do a full backup of /usr, and schedule it to run every Wednesday.

Define another job usr-incr to do an incremental backup of /usr, and schedule it to run on Thursday, Friday, Monday, and Tuesday.

In the job details for usr-full set Append to to null. Each time usr-full runs it will start a new media set.

In the job details for usr-incr set Append to to usr-full. Each time usr-incr runs it will write to the end of the current media set created by usr-full.

| Job name | Run on | Append to job |
|---|---|---|
| usr-full | Wednesday | (blank) |
| usr-incr | Thursday | usr-full |
| usr-incr | Friday | usr-full |
| usr-incr | Monday | usr-full |
| usr-incr | Tuesday | usr-full |

- Figure 6 — Append incremental backups to weekly full backup

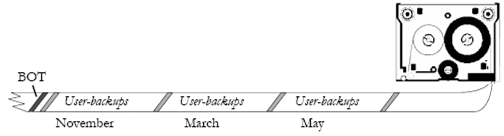

Example: accumulate user backups

Perform occasional user backups to a single media set.

Steps

Define an at-request backup called User-backups to archive selected user directories. In the job details for User-backups set Append to to User-backups.

The first time User-backups runs it will start a new media set. After that it will keep appending to the same media set until the media set is moved to another location.

| Job name | Run | Append to job |
|---|---|---|
| User-backups | At-request | User-backups |

- Figure 7 — Write occasional user backups to a media set

What Format is it Written In?

Format of Backup: cpio, dump, tar, image

Backup3G includes four backup drivers: FSdump, FScpio, FSfilesys and FStar. These drivers write data using the standard utilities dump, cpio and tar.

Use of the backup3G backup drivers is recommended. If you add new backup and recovery methods that don’t use these drivers, you must add index support yourself if you wish to be able to create indexes for your backups.

Data Compression

There are four compressed backup methods:

- full zcpio

- full zcpio - MP

- incremental zcpio

- incremental zcpio - MP

The only difference from the standard cpio methods is that the output is piped to compress before being written. This has the advantages that less space is required on the output volume, and network backups are faster, since the compression is done on the file-host before the data are sent over the network. A potential disadvantage is that the backup will run slower if the machine is heavily loaded or there are many concurrent backup jobs.

Normally backup3G calculates how much data can be written to each volume. When data compression is used this can’t be done accurately, as the compression ratio varies depending on the type of files. The solution is to specify a smaller part size for the output media type. Backup3G simply keeps writing parts out until it reaches end-of-volume, then requests a new volume and keeps writing.

Note that the same applies to non-compressed methods writing to drives that perform hardware compression.
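The ‘keep writing parts until end-of-volume’ behaviour can be simulated in Python. In this hypothetical sketch the compressed size of each part is known in advance (backup3G only discovers end-of-volume while writing), every part is assumed to fit on an empty volume, and a part that does not fit in the remaining space is written whole to the next volume, as with the rewrite after an unexpected end-of-tape.

```python
def write_parts(part_sizes, volume_capacity):
    """Place parts of unpredictable (compressed) size onto volumes.

    Returns a list of volumes, each a list of 1-based part numbers.
    """
    volumes = [[]]
    free = volume_capacity
    for number, size in enumerate(part_sizes, start=1):
        if size > free:
            # End-of-volume reached: request a new volume and write the part there.
            volumes.append([])
            free = volume_capacity
        volumes[-1].append(number)
        free -= size
    return volumes


# Hypothetical compressed part sizes (arbitrary units) on volumes of capacity 100.
layout = write_parts([40, 35, 30, 20], volume_capacity=100)
```

With these sizes, parts 1 and 2 fill the first volume to 75 and part 3 moves to the second volume; the smaller the part size, the less space is left unused at the end of each volume.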

Note: Compression and decompression take time, especially with large blocks of data. For efficient backup and retrieval, it is better not to use compression if the initial data blocks are very large. For example, it will take longer to compress a 1 GB filesystem than it would simply to back it up to tape.

Index Method: Standard, ls_index Method, or No Index

To allow faster recovery of selected files, a compressed index of all the directories and files in a backup can be stored on disk. This index may be created when the backup job is run, or the details may be extracted later by reading all the files in the media set. Indexes can be deleted later to save disk space without affecting the backup itself.

Indexes can be enabled or disabled for each step in a backup job.

Which backup methods support online indexes

All backup methods based on the backup drivers FScpio, FSdump, FStar, or FSfilesys can create online indexes. Note that FSdump can only create an index at the time the backup job runs.

Three other conditions are necessary for an online index to be created for a particular backup item:

- The Index field for the backup method must be set to ‘yes’. This indicates that index support is enabled for this backup method.

- The Index field for the backup item must be set to ‘yes’. This indicates that you want to create an index for this backup item.

- The recovery method that will be used to recover files from this backup must include an Index command. This command writes file details such as size, date and time last modified, owner and permissions to a compressed disk file.

Note: Backup3G cannot correctly index a file name that contains a Tab. File names containing other special characters such as ampersands (&) and quotes (' ") are indexed correctly.
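The conditions for creating an online index can be collected into a single check. This Python sketch is illustrative only: the function names and boolean parameters are hypothetical stand-ins for the Index fields and the recovery method's Index command, and it models only the FSdump restriction; whether other drivers or ls_index can build an index afterwards is not covered here.

```python
INDEXING_DRIVERS = {"FScpio", "FSdump", "FStar", "FSfilesys"}


def can_create_online_index(driver, method_index, item_index,
                            recovery_has_index_cmd, at_run_time=True):
    """Check the conditions listed above for creating an online index.

    driver                 -- backup driver the method is based on
    method_index           -- Index field of the backup method is 'yes'
    item_index             -- Index field of the backup item is 'yes'
    recovery_has_index_cmd -- the recovery method includes an Index command
    at_run_time            -- True if indexing while the job runs, False if later
    """
    if driver not in INDEXING_DRIVERS:
        return False
    if driver == "FSdump" and not at_run_time:
        return False                  # FSdump can only index when the job runs
    return method_index and item_index and recovery_has_index_cmd


def indexable_name(file_name):
    """File names containing a Tab cannot be indexed correctly."""
    return "\t" not in file_name
```

All three field conditions must hold at once; turning off the Index field on a single backup item is enough to skip indexing for that item while other items using the same method are still indexed.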

Creating Indexes Faster With ls_index

FSfilesys supports an alternate method of creating an index. ls_index gets file details by doing an ls -l of the data before starting the backup. This is the same method used by FSdump; the dump command itself doesn’t support extracting a list of file names from a backup.

On UNIX systems with slow pipe/FIFO performance, this should produce indexes faster. Note that if a file changes after the ls command is run but before it is backed up, its index entry will contain the ‘old’ details.

Creating an Index for an Existing Backup

You can create an online index even if this wasn’t done when the backup was taken. The only condition is that you must use a backup driver such as FScpio that can generate an index by reading the files on the backup volume.

When you select a backup in the Recover module (Recover > Contents > Open), backup3G lists the contents of the backup. If you select an item that doesn’t have an index, you can create one (Index > Create). Backup3G prompts you to load the backup volume, and generates an index. You can then display the contents of the backup or recover files selectively based on the index.